Have you ever wondered how AI tools like ChatGPT, powered by large language models (LLMs), manage to answer nearly any question posed by users, especially in open-domain queries that require extensive knowledge or up-to-date facts?

Relying solely on traditional LLMs to generate answers can be incredibly challenging. Here’s why:

1. Knowledge Limitations: LLMs are trained on vast amounts of data, but their knowledge is restricted to the information available in the training data. Moreover, the knowledge is frozen at the point of the last training update, meaning that LLMs may struggle with queries involving highly specialized or recently updated information.

2. Context Window Constraints: LLMs operate within a fixed context window, limiting their ability to process long texts or complex queries. This constraint poses a challenge when the model needs to integrate vast amounts of information spread across multiple sources.

3. Complexity in Reasoning: LLMs can struggle with tasks requiring complex reasoning, such as multi-hop inference across documents, because they need to maintain a coherent reasoning chain within the context window.

Enter RAG: Retrieval-Augmented Generation

RAG (Retrieval-Augmented Generation) is an advanced method that combines information retrieval with generative models. It’s particularly effective for tasks requiring the integration of information from extensive external data sources to produce accurate and contextually relevant outputs. By incorporating RAG, LLMs can overcome many of their inherent limitations, allowing them to access a broader knowledge base, retrieve up-to-date information, and enhance reasoning across multiple documents.

The Technical Breakdown:

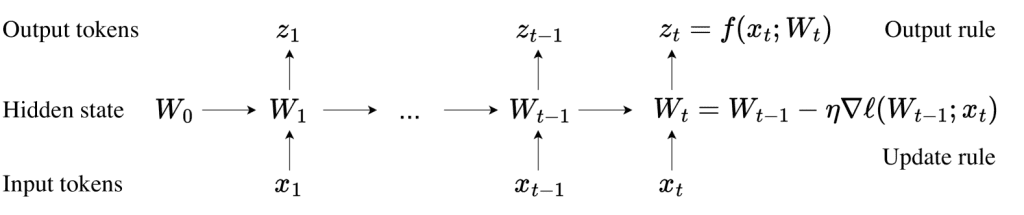

1. Query and Retrieval Module:

When a user inputs a query, RAG systems first pass it to the retrieval module, which searches predefined knowledge bases or document collections for the most relevant information. This is typically done with dense (vector-space) retrieval: an encoder, such as a BERT-based dual encoder model, embeds both the query and the documents into a shared vector space. The system then calculates the similarity between these vectors, commonly cosine similarity or a dot product, to select the most relevant document fragments.

2. Generation Module:

The retrieved document fragments are then combined with the original query and passed to the generation module, typically a pre-trained model like GPT-3 or T5. This model generates natural language answers, integrating the retrieved content into a coherent and contextually appropriate response.

3. Output the Generated Answer:

Finally, the generation module produces an answer relevant to the user’s query, incorporating key information from the retrieved data and presenting it in a clear, concise manner.
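
The three steps above can be sketched end to end in a few lines of Python. This is a minimal illustration under stated assumptions, not how any production system is built: the `DOCUMENTS` list, the bag-of-words `embed` function (a stand-in for a learned encoder), and the `generate` placeholder (where a real system would call an LLM) are all hypothetical names made up for the example.

```python
import math
import re
from collections import Counter

# Toy knowledge base (illustrative fragments, not a real corpus).
DOCUMENTS = [
    "The DeLorean DMC-12 is the time machine in Back to the Future.",
    "Retrieval-augmented generation combines a retriever with a generator.",
    "A context window limits how much text a language model can read at once.",
]


def embed(text):
    """Stand-in for a learned encoder: a sparse bag-of-words count vector."""
    return Counter(re.findall(r"[a-z0-9]+", text.lower()))


def cosine(a, b):
    """Cosine similarity between two sparse count vectors."""
    dot = sum(a[t] * b[t] for t in a.keys() & b.keys())
    norm_a = math.sqrt(sum(v * v for v in a.values()))
    norm_b = math.sqrt(sum(v * v for v in b.values()))
    return dot / (norm_a * norm_b) if norm_a and norm_b else 0.0


def retrieve(query, documents, k=2):
    """Step 1: rank fragments by similarity to the query and keep the top k."""
    q = embed(query)
    ranked = sorted(documents, key=lambda d: cosine(q, embed(d)), reverse=True)
    return ranked[:k]


def build_prompt(query, fragments):
    """Step 2a: combine the retrieved fragments with the original query."""
    context = "\n".join(f"- {f}" for f in fragments)
    return (
        "Answer using only the context below.\n\n"
        f"Context:\n{context}\n\n"
        f"Question: {query}\nAnswer:"
    )


def generate(prompt):
    """Step 2b: placeholder for the generation module (an LLM call in practice)."""
    return f"[model output for a prompt of {len(prompt)} characters]"


def answer(query, documents=DOCUMENTS, k=2):
    """Step 3: retrieve, assemble the prompt, and return the generated answer."""
    return generate(build_prompt(query, retrieve(query, documents, k)))
```

Calling `retrieve("What car is the time machine in Back to the Future?", DOCUMENTS, k=1)` selects the DeLorean fragment, because it is the only one that shares words with the query. Swapping `embed` for a trained encoder and `generate` for a real model call would keep the same overall shape.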

Key Components of RAG:

• Dual Encoder Model: Used during the retrieval phase. One encoder processes the query, and another processes document fragments. Their output vectors are compared to identify the most relevant information.

• Vector Retrieval: This technique, involving methods like Nearest Neighbor Search, efficiently retrieves relevant content from large document databases.

• Generation Models: Models such as GPT-3, T5, or BART generate coherent text conditioned on the retrieved information, often after being fine-tuned for the task.

• Seq2Seq Models: Encoder-decoder models such as T5 and BART are often used in the generation process, transforming the combined input of the query and retrieved text into a well-structured answer.

• Reinforcement Learning and Tuning: These techniques can be applied to optimize the quality of the generated output so that it better meets user expectations.
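
To make the first two components concrete, here is a toy sketch of a dual encoder feeding a brute-force nearest neighbor search. Everything in it is an assumption for illustration: `encode_query` and `encode_document` are hypothetical hashing-based stand-ins for two trained neural encoders, and the linear scan in `nearest_neighbors` stands in for the approximate indexes that large systems typically use.

```python
import heapq
import math
import re
import zlib

DIM = 256  # toy embedding size, chosen arbitrarily for this sketch


def _tokens(text):
    return re.findall(r"[a-z0-9]+", text.lower())


def _hashed_unit_vector(tokens):
    """Hash tokens into a fixed-size count vector, then L2-normalize it."""
    vec = [0.0] * DIM
    for tok in tokens:
        vec[zlib.crc32(tok.encode("utf-8")) % DIM] += 1.0
    norm = math.sqrt(sum(x * x for x in vec))
    return [x / norm for x in vec] if norm else vec


# Hypothetical: the query side ignores common question words.
QUESTION_WORDS = {"what", "who", "how", "why", "when", "where", "which",
                  "is", "are", "do", "does"}


def encode_query(text):
    """Query encoder (toy): hashed bag of words without question words."""
    return _hashed_unit_vector([t for t in _tokens(text) if t not in QUESTION_WORDS])


def encode_document(text):
    """Document encoder (toy): hashed bag of words over all tokens."""
    return _hashed_unit_vector(_tokens(text))


def build_index(fragments):
    """Embed every fragment once, ahead of query time."""
    return [(encode_document(f), f) for f in fragments]


def nearest_neighbors(query, index, k=2):
    """Exact nearest neighbor search: score every fragment, keep the top k."""
    q = encode_query(query)
    scored = ((sum(a * b for a, b in zip(q, vec)), frag) for vec, frag in index)
    return heapq.nlargest(k, scored, key=lambda pair: pair[0])
```

The design point this is meant to show is that document vectors are computed once in `build_index`, so only the query needs to be encoded when a request arrives. Because both encoders emit unit-length vectors, the dot product here equals cosine similarity.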

The Limitations of RAG:

While RAG offers powerful enhancements, it also presents several challenges:

• Complexity and Computational Cost: Integrating information retrieval systems with generative models adds complexity to the system, increasing the design and maintenance burden. Running both a retrieval step and a generation step for every query demands significant computational resources, particularly when dealing with large-scale datasets or extensive external databases.

• Handling Long Texts: The context window limitation of LLMs still applies. With lengthy documents, critical information can be "lost in the middle" of the context, and simple retrieval of a few fragments is often insufficient for global queries that depend on many parts of the source material.

• Hallucination Risk: Despite the enhanced retrieval of external data, RAG models can still generate plausible-sounding but incorrect information, especially when the retrieved content doesn’t perfectly align with the query.
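
For the long-text limitation in the list above, a common mitigation is to split documents into overlapping chunks before indexing and then keep only as many top-ranked fragments as fit in the model's context. The sketch below is a rough illustration of that idea. It assumes whitespace-separated words as a crude proxy for tokens, and the function names and default sizes are hypothetical.

```python
def chunk_words(text, size=50, overlap=10):
    """Split text into chunks of `size` words, each sharing `overlap` words
    with the previous chunk so sentences cut at a boundary keep some context."""
    if not 0 <= overlap < size:
        raise ValueError("overlap must satisfy 0 <= overlap < size")
    words = text.split()
    step = size - overlap
    chunks = []
    for start in range(0, len(words), step):
        chunks.append(" ".join(words[start:start + size]))
        if start + size >= len(words):
            break  # this chunk already reached the end of the text
    return chunks


def pack_fragments(ranked_fragments, budget):
    """Greedily keep fragments in rank order until the word budget is used up."""
    kept, used = [], 0
    for frag in ranked_fragments:
        cost = len(frag.split())
        if used + cost > budget:
            break
        kept.append(frag)
        used += cost
    return kept
```

This reduces the chance of overflowing the context window, but it does not by itself address global queries, since each chunk is still retrieved and scored in isolation.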

Perplexity AI: Leveraging RAG for Enhanced Search

Perplexity AI, an AI-driven research and conversational search engine, harnesses the power of RAG to deliver comprehensive and accurate responses at lightning speed. Whether you’re looking to dive deep into a topic or explore specific details, Perplexity AI offers an efficient, all-encompassing solution. For instance, after watching the musical “Back to the Future,” Perplexity AI helped me quickly gather all the relevant information about the original film in one go, providing insights into how the DeLorean became a cultural icon.

As explained by Perplexity CEO Aravind Srinivas, RAG technology is at the heart of Perplexity AI, making it an indispensable tool for anyone looking to go beyond basic search engine capabilities and gain a fuller understanding of their queries.

In conclusion, RAG represents a significant advancement in the field of natural language processing, bridging the gap between static knowledge and dynamic, context-aware information retrieval. It empowers tools like Perplexity AI to deliver more precise, contextually relevant, and up-to-date answers, making them essential in today’s fast-paced, information-driven world.