Reinforcement Learning from Human Feedback (RLHF) has been fundamental to training models like ChatGPT. However, as models advance, the quality of human feedback reaches a limit, hindering further improvements. OpenAI’s researchers have tackled this challenge by developing CriticGPT—a model trained to detect and correct subtle errors in AI-generated outputs.

🚀 How It Works:

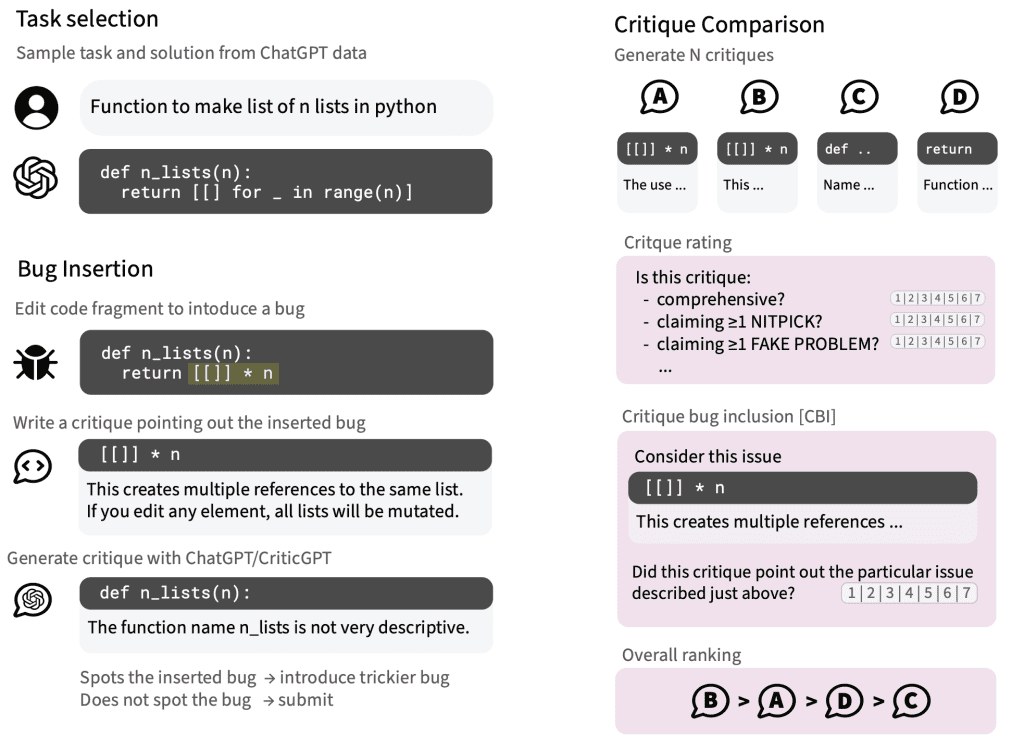

1. Tampering: Researchers inject subtle errors into ChatGPT’s outputs, ensuring high-quality and challenging training data.

2. CriticGPT Feedback: CriticGPT generates critiques of these tampered outputs, which are then evaluated by human experts on a 1-7 ordinal scale across dimensions like comprehensiveness, bug detection, and the presence of hallucinated bugs or nitpicks.

3. Optimization: By utilizing Proximal Policy Optimization (PPO) and Force Sampling Beam Search (FSBS), CriticGPT balances precision with comprehensiveness, reducing false positives while ensuring valuable feedback.

💡 Results:

• In evaluations, 63% of CriticGPT-generated critiques were preferred over human reviewers, particularly in detecting subtle code errors.

• While CriticGPT occasionally produces “hallucinated” issues, these can be mitigated through human-AI collaboration, resulting in more accurate and comprehensive error detection.

🤝 The Future of Human-AI Collaboration:

Combining the strengths of human reviewers and CriticGPT enhances performance and surpasses traditional optimization boundaries.

The era of human-AI collaboration is here!