As Large Language Models (LLMs) continue to evolve, their increasing complexity has brought a new set of challenges. One significant issue is that their outputs are often vague, ambiguous, or logically inconsistent, which makes it difficult for users to interpret and trust the model’s reasoning. In response, OpenAI has introduced a training method known as “Prover-Verifier Games,” designed to improve the clarity and verifiability of AI-generated content.


Understanding Prover-Verifier Games

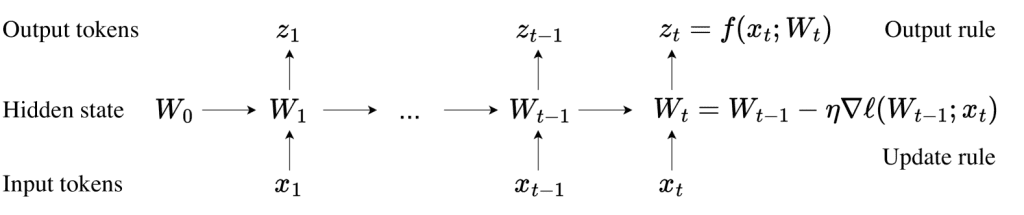

The Prover-Verifier framework involves two key components: the Prover model and the Verifier model. Here’s how the process works:

1. Prover Model Generates Output:

The Prover model, a large language model, is responsible for generating solutions or answers to given problems. These outputs can include complex reasoning, calculations, or factual information.

2. Verifier Model Checks Output:

A smaller Verifier model then reviews the outputs generated by the Prover and estimates how likely each one is to be correct. Solutions that contain errors, ambiguities, or inconsistencies should receive lower scores than clear, correct ones.

3. Reinforcement Learning Process:

Through a reinforcement learning process, the Prover model is trained to produce outputs that are not only accurate but also easy for the Verifier to validate. The Verifier, in turn, is trained on the Prover’s outputs, including deliberately flawed solutions written by a “sneaky” version of the Prover, so it learns to detect subtle errors and keeps challenging the Prover to improve.

The Prover receives higher rewards when it generates outputs that are both correct and easy to verify. Repeating this feedback loop over several rounds is meant to make the Prover’s outputs more robust and easier for humans to follow.
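To make the training loop described above concrete, here is a deliberately tiny Python sketch of the Prover’s side of the game. Everything in it is a hypothetical stand-in, not OpenAI’s implementation: the “problems” are single-digit additions, the verifier is a fixed rule-based checker rather than a trained model, the reward (correct solutions earn the verifier’s score, wrong ones a flat penalty) is our own simplification, and reinforcement learning is reduced to an epsilon-greedy bandit choosing between a terse answer and one that shows its work.

```python
import random

# Hypothetical toy setup: each "problem" is adding two single-digit integers.
def make_problem(rng: random.Random) -> tuple[int, int]:
    return rng.randint(1, 9), rng.randint(1, 9)

# Two hypothetical output styles the toy prover can choose between.
# Both give the correct answer; only the second shows its work.
STYLES = {
    "terse": lambda a, b: f"{a + b}",
    "step_by_step": lambda a, b: f"{a} + {b} = {a + b}",
}

def verifier_score(solution: str) -> float:
    """Fixed rule-based stand-in for a verifier, returning a score in [0, 1].

    1.0 if the solution contains one 'x + y = z' step that checks out,
    0.0 if such a step is present but wrong or unparseable,
    0.5 ("unsure") if there is no work to check at all.
    """
    lhs, sep, rhs = solution.partition("=")
    if not sep:
        return 0.5
    try:
        x, y = (int(t) for t in lhs.split("+"))  # ValueError unless exactly two terms
        z = int(rhs)
    except ValueError:
        return 0.0
    return 1.0 if x + y == z else 0.0

def prover_reward(is_correct: bool, score: float, wrong_penalty: float = -1.0) -> float:
    """Hypothetical reward: correct answers earn the verifier's score; wrong ones a penalty."""
    return score if is_correct else wrong_penalty

def train_prover(steps: int = 2000, lr: float = 0.1, epsilon: float = 0.1,
                 seed: int = 0) -> dict[str, float]:
    """Epsilon-greedy bandit over output styles, a toy stand-in for RL on the prover."""
    rng = random.Random(seed)
    value = {name: 0.0 for name in STYLES}  # running reward estimate per style
    for _ in range(steps):
        a, b = make_problem(rng)
        if rng.random() < epsilon:
            style = rng.choice(list(STYLES))   # explore a random style
        else:
            style = max(value, key=value.get)  # exploit the best style so far
        solution = STYLES[style](a, b)
        # Ground-truth check on the final answer only.
        is_correct = solution.split("=")[-1].strip() == str(a + b)
        reward = prover_reward(is_correct, verifier_score(solution))
        value[style] += lr * (reward - value[style])  # move estimate toward reward
    return value

if __name__ == "__main__":
    print(train_prover())
```

Because both styles are correct but only `step_by_step` gives the checker something to confirm, its learned estimate should finish above `terse`, mirroring the idea that the Prover is pushed toward outputs that are easy to check. In the actual method the Verifier also keeps learning between rounds, which this sketch omits.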

Key Benefits and Findings

Enhanced Verifiability:

By involving a Verifier model, the Prover-Verifier Games method makes AI-generated content easier to check. The aim is outputs that are not only correct but also presented in a way that humans or other AI systems can readily understand and verify; in OpenAI’s experiments, time-constrained human evaluators became more accurate at checking the Prover’s solutions as this training progressed.
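One simple way to put a number on “easy to verify”, assumed here for illustration rather than taken from OpenAI’s setup, is checker accuracy: the fraction of solutions for which a checker (human or model) reaches the right accept/reject verdict. The function name and inputs below are hypothetical.

```python
from collections.abc import Sequence

def checker_accuracy(verdicts: Sequence[bool], labels: Sequence[bool]) -> float:
    """Fraction of solutions where the checker's accept (True) / reject (False)
    verdict matches the ground-truth correctness label."""
    if len(verdicts) != len(labels):
        raise ValueError("verdicts and labels must have the same length")
    if not labels:
        raise ValueError("need at least one labeled solution")
    matches = sum(v == y for v, y in zip(verdicts, labels))
    return matches / len(labels)

# Hypothetical example: four solutions, two of which the checker judged correctly.
print(checker_accuracy([True, True, False, False], [True, False, False, True]))  # 0.5
```

Comparing this figure for solutions produced before and after training is one way to test whether outputs have actually become easier to verify.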

Balanced Accuracy and Readability:

One of the primary goals of this method is to strike a balance between the accuracy of the content and its readability. Rather than maximizing correctness at any cost, it aims for outputs that are clear and concise while giving up as little accuracy as possible; OpenAI notes that some accuracy is traded away for this gain in legibility.

Improved Alignment with Human Reasoning:

The interaction between the Prover and Verifier models helps the AI align more closely with human reasoning patterns. This leads to outputs that are not only accurate but also intuitively understandable, making it easier for users to follow the AI’s logic.

Limitations and Future Directions

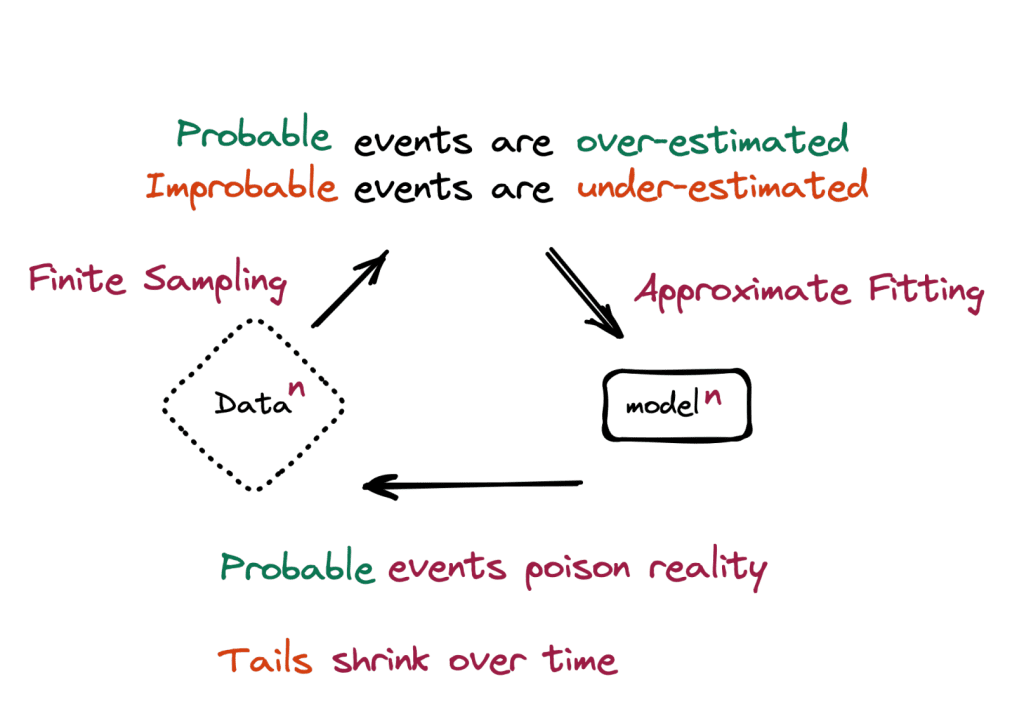

While the Prover-Verifier Games approach shows promise, it is not without limitations. So far, the experiments have been conducted primarily on grade-school math word problems (the GSM8K dataset). This raises questions about the method’s effectiveness across other domains and types of content, and further research is needed to validate its applicability to a broader range of tasks and datasets.

Moreover, the added complexity of training both a Prover and a Verifier model introduces additional computational overhead, which could be a consideration for large-scale deployments.

Conclusion

The Prover-Verifier Games method represents a significant step forward in making AI-generated content more transparent, trustworthy, and aligned with human expectations. By ensuring that outputs are not only accurate but also clear and verifiable, this approach addresses some of the critical challenges posed by the increasing complexity of LLMs.

As the field of AI continues to advance, methods like Prover-Verifier Games are likely to play an important role in bridging the gap between machine-generated content and human interpretability. This balance is essential for building trust and ensuring that AI can be effectively integrated into decision-making processes across various industries.