Enhancing AI Output: Understanding Prover-Verifier Games

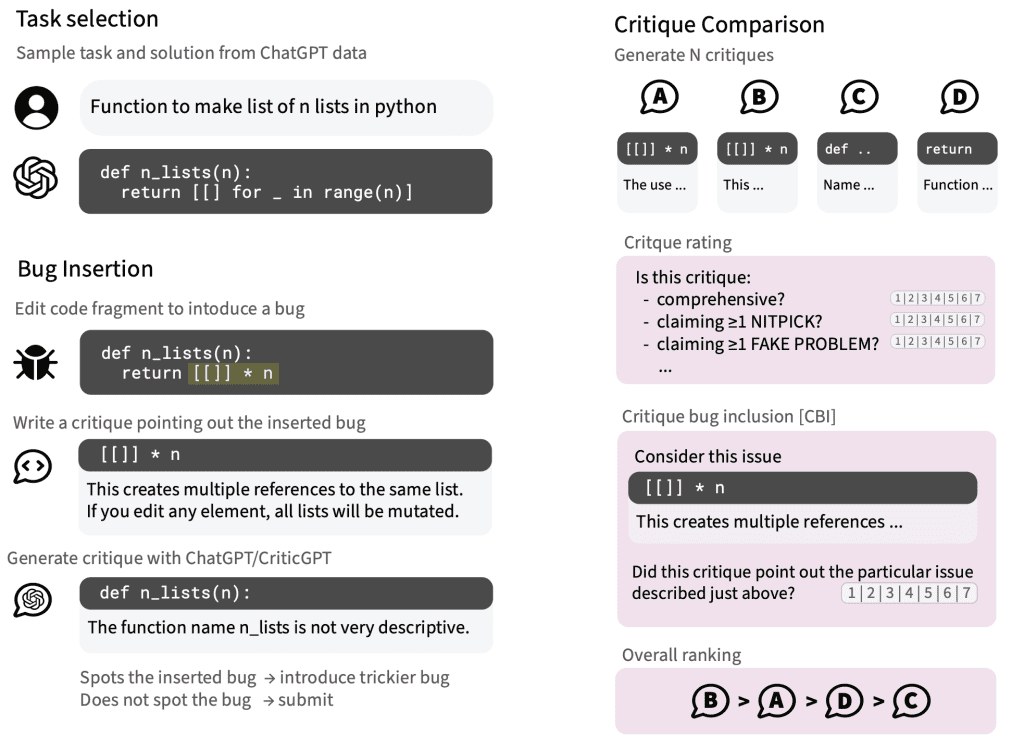

As Large Language Models (LLMs) continue to evolve, their increasing complexity has brought a new set of challenges. One significant issue is the generation of outputs that are often vague, ambiguous, or logically inconsistent. These issues make it difficult for users to interpret and trust the AI’s reasoning. In response, OpenAI has introduced a novel

Enhancing AI Output: Understanding Prover-Verifier Games Read More »

Research Highlights